Content Drive — How we organize and share billions of files in Netflix studio

by Esha Palta, Ankur Khetrapal, Shannon Heh, Isabell Lin, Shunfei Chen

Introduction

Netflix has pioneered the idea of a Studio in the Cloud, giving artists the ability to work from different corners of the world to create stories and assets to entertain the world. Starting at the point of ingestion, where data comes off the camera, media goes through many stages, some of which are shown below. The media undergoes comprehensive backup routines at every stage of this process, with frequent uploads and downloads. To support these processes and studio applications, we need to provide a distributed, scalable, and performant media cloud storage infrastructure.

For asset storage, all of these media files are securely delivered to and stored in Amazon Simple Storage Service (S3). Netflix maintains an identity for each of these objects, along with other essential metadata, so that the storage infrastructure layers can address them.

At the edge, where artists work with assets, the artist applications and the artists themselves expect a file/folder interface so that they can access these files seamlessly, without agents translating between formats. We want working with studio applications to be a seamless experience for our artists. This need is not restricted to artists; it also applies to studio workflows. A great example is the asset transformations that happen during the rendering of content.

We needed a system that could provide the ability to store, manage, and track billions of these media objects while keeping a familiar file/folder interface that lets users upload freeform files and provide management capabilities such as create, update, move, copy, delete, download, share, and fetch arbitrary tree structures.

In order to do this effectively, reliably, and securely to meet the requirements of a cloud-managed globally distributed studio, our media storage platform team has built a highly scalable metadata storage service — Content Drive.

Content Drive (or CDrive) is a cloud storage solution that provides file/folder interfaces for storing, managing, and accessing the directory structure of Netflix’s media assets in a scalable and secure way. It empowers applications such as Content Hub UI to import media content (upload to S3), manage its metadata, apply lifecycle policies, and provide access control for content sharing.

In this post we will share an overview of the CDrive service.

Features

- Storing, managing and tracking billions of files and folders while retaining folder structure. Provide a familiar Google Drive-like interface which lets users upload freeform files and provide management capabilities such as create, update, move, copy, delete, download, share, and fetch arbitrary tree structures.

- Provide access control for viewing, uploads and downloads of files and folders.

- Collaboration/Sharing — share work-in-progress files.

- Data transfer manifest and token generation — Generate download manifest and tokens for requested files/folders after verifying authorization.

- Files/folders notifications — Provide change notifications for files/folders. This enables live sharing and collaboration use cases in addition to supporting dependent backend applications to complete their business workflows around data ingestion.

Architecture

CDrive Components

- REST API and DGS (GraphQL) layer that provides endpoints to create/manage files/folders, manage shares for files/folders, and get authorization tokens for files/folders upload/download.

- CDrive service layer that does the actual work of creating and managing tree structures (implements operations such as create, update, copy, move, rename, delete, checksum validation, etc. on file/folder structures).

- Access control layer that provides user and application-based authorization for files/folders managed in CDrive.

- Data Transfer layer that proxies requests to other services for transfer tracking and transfer token generation after authorization.

- Persistence layer that performs the metadata reads and updates in transactions for files/folders managed in CDrive.

- Event Handler that produces event notifications for users and applications to consume and take action. For example, CDrive generates an event on upload completion for a folder.

Fig 3 shows a sample CDrive usage example. We can see that different users access workspaces based on their user credentials. Users can perform all file/folder-level operations on data present in their workspaces and upload/download files/folders into their workspaces.

Design and Concepts

CDrive stores and manages files and folder metadata in hierarchical tree structures. It allows users and applications to group files into folders and files/folders into workspaces and supports features like create/update/delete/move/copy etc.

The tree structures belong to individual workspaces (partitions) and contain folders as branches and files as leaf nodes (a folder can also be a leaf node).

CDrive uses CockroachDB to store its metadata and directory structure. There are a few reasons why we chose CockroachDB:

- To provide a strong consistency guarantee on operations. The type and correctness of data are very important. CDrive maintains an invariant of a unique file path for each file/folder. This means at any point in time a file path will represent a unique CDrive node.

- Need for complex queries. CDrive needs to support a variety of complex file/folder operations such as create/merge/copy/move/updateMetadata/bulkGet etc., which requires a persistence layer to perform join queries in an optimized way.

- Need for distributed transactions. CockroachDB provides distributed transaction support with its internal sharded architecture. CDrive data modeling enables it to perform metadata operations in a very efficient way.

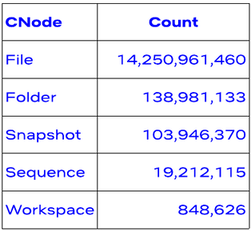

CNode

Each file, folder, or workspace is represented by a node structure in CDrive. A file path always points to a unique CNode. This means that any metadata operation that modifies the file path generates a new CNode and moves the older one to the deleted status. For example, every time an artist copies a file, CDrive creates a new CNode for the new file path.

A CDrive node can be of the following types:

- Root/Workspace: This is the top-level partition for creating a file/folder hierarchy per application and project using CDrive. It is analogous to the disk partition on the OS.

- Folder: A container for other containers or files.

- File: A leaf node that has a reference to a data location this CNode represents.

- Sequence: A special container folder for file sequences. Sequence is a special container type in CDrive that can contain millions of files under it. This is created to represent special media files such as off-camera footage, which has a range of frames that form a small clip. All frames/files in a Sequence start with the same prefix and suffix but differ in the frame number, e.g. frame.##.JPG. A sequence can contain arbitrary lists of frame ranges (start index and end index). The sequence can provide a summary of millions of frames without looking into each file. CDrive provides APIs with the option to expand the sequence on a get operation. Whenever a folder is uploaded, the CDrive server inspects the folder to look for sequence files and groups them into a sequence.

- Snapshot: A Snapshot is a special container CNode that guarantees its subtree is immutable. The immutable subtree is a shallow copy of metadata from a folder that is not immutable. Typically applications create a snapshot to “lock” files/folders from further mutations to represent an asset.
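The Sequence grouping described in the list above can be sketched as follows. This is a minimal illustration, not CDrive's actual detection logic: the filename pattern (prefix, frame number, extension) and the function name `group_sequences` are assumptions made for the example.

```python
import re
from collections import defaultdict

# Assumed naming scheme: <prefix><frame number><.extension>, e.g. "frame.0001.JPG".
FRAME_RE = re.compile(r"^(?P<prefix>.*?)(?P<frame>\d+)(?P<suffix>\.[^.]+)$")


def group_sequences(filenames):
    """Group frame files by (prefix, suffix) and collapse frame numbers into ranges.

    Returns (sequences, others) where sequences maps (prefix, suffix) to a list
    of inclusive (start, end) frame ranges, and others holds non-frame files.
    """
    frames = defaultdict(list)
    others = []
    for name in filenames:
        match = FRAME_RE.match(name)
        if match:
            frames[(match["prefix"], match["suffix"])].append(int(match["frame"]))
        else:
            others.append(name)

    sequences = {}
    for key, numbers in frames.items():
        numbers = sorted(set(numbers))
        ranges = []
        start = prev = numbers[0]
        for n in numbers[1:]:
            if n != prev + 1:          # gap found: close the current range
                ranges.append((start, prev))
                start = n
            prev = n
        ranges.append((start, prev))   # close the final range
        sequences[key] = ranges
    return sequences, others
```

Storing only the ranges is what lets a sequence summarize a very large number of frames without listing each file.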

CNode Metadata

Each CNode contains the attributes associated with that node. The minimum metadata present with each node is UUID, Name, Parent Id, Path, Size, Checksums, Status, and Data location. For efficiency, CNode also contains its directory path (in terms of node UUIDs as well as filename path).
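As a rough sketch, the metadata listed above could be modeled like this. The field names, enum values, and types are assumptions for illustration; the actual CDrive schema is not shown in this post.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional
from uuid import uuid4


class NodeType(Enum):
    WORKSPACE = "workspace"
    FOLDER = "folder"
    FILE = "file"
    SEQUENCE = "sequence"
    SNAPSHOT = "snapshot"


class Status(Enum):
    ACTIVE = "active"      # hypothetical name for a live node
    DELETED = "deleted"


@dataclass
class CNode:
    name: str
    node_type: NodeType
    parent_id: Optional[str]               # None for a workspace root
    path: str                              # filename path, e.g. "/dailies/clip.mov"
    id_path: List[str]                     # ancestor UUIDs from the root down
    size: int = 0
    checksums: Dict[str, str] = field(default_factory=dict)
    status: Status = Status.ACTIVE
    data_location: Optional[str] = None    # only meaningful for file nodes
    node_id: str = field(default_factory=lambda: str(uuid4()))
```

Keeping both `path` and `id_path` on the node means a lookup by path or by ancestry does not need to walk the tree.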

Parent-Child Hierarchy

All CNodes maintain a reference to their parent node ID. This is how CDrive maintains the hierarchical tree structure. The parent reference denotes the containing folder, and the root node has an empty parent.
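To make the parent reference concrete, here is a small sketch that rebuilds a filename path by following parent IDs up to the root. The dictionary layout and the name `build_path` are illustrative assumptions, not CDrive's API.

```python
def build_path(node_id, nodes):
    """Walk parent references up to the root and return the filename path."""
    parts = []
    current = node_id
    while current is not None:
        node = nodes[current]
        parts.append(node["name"])
        current = node["parent_id"]   # the root has an empty (None) parent
    return "/" + "/".join(reversed(parts))


# Hypothetical three-level tree: workspace -> folder -> file.
nodes = {
    "w1": {"name": "workspace", "parent_id": None},
    "f1": {"name": "dailies", "parent_id": "w1"},
    "f2": {"name": "clip.mov", "parent_id": "f1"},
}
```

A walk like this costs one lookup per level, which is one reason to also store the full path on each node.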

Data Location

Each file CNode contains a link or URL to the data location where the actual data bytes for that file are stored (e.g., in S3). Multiple CNodes can reference the same data location, for example when a CNode has been copied. If a CNode is moved, its data location remains unchanged. A file CNode can also be present in multiple physical locations, and CDrive provides information about the transfer state of the file in each of these locations (Unknown/Created/Available/Failed).
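The relationship between nodes and data locations can be illustrated with a short sketch. It assumes, per the CNode section, that changing a path produces a new node and retires the old one; `FileNode`, `copy_file`, and `move_file` are hypothetical names.

```python
from dataclasses import dataclass, replace
from uuid import uuid4


@dataclass(frozen=True)
class FileNode:
    node_id: str
    path: str
    data_location: str
    status: str = "active"


def copy_file(node, new_path):
    """Return a new node at new_path that points at the same stored bytes."""
    return replace(node, node_id=str(uuid4()), path=new_path)


def move_file(node, new_path):
    """Return (retired original, new node); the data location is untouched."""
    moved = replace(node, node_id=str(uuid4()), path=new_path)
    retired = replace(node, status="deleted")
    return retired, moved
```

In both cases only metadata changes; no bytes are copied or relocated in the underlying store.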

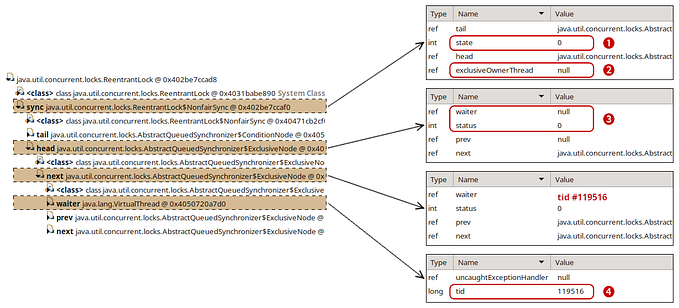

Concurrency and Consistency

CDrive allows multiple users/applications to access shared files/folders simultaneously. CDrive uses CockroachDB serializable transactions to support this and maintains the invariant of a unique file path for each node in CDrive.

An operation such as Copy or Move propagates changes to all the children in a subtree being modified. This involves updating metadata such as parent, file path, and/or partition for all nodes in that subtree.
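A minimal sketch of how a move propagates to a subtree is shown below, using an in-memory mapping from path to node ID. The function name and data layout are assumptions; in CDrive the equivalent update would run inside a database transaction, and conflict handling is left out here.

```python
def move_subtree(paths, src, dst):
    """Rewrite every path at or under src so that it lives under dst.

    paths maps a file path to a node ID. A new mapping is returned and the
    input is left unchanged.
    """
    if dst == src or dst.startswith(src + "/"):
        raise ValueError("cannot move a folder into itself")
    moved = {}
    for path, node_id in paths.items():
        # Match the folder itself or anything below it, but not siblings
        # that merely share a name prefix (e.g. "/a" vs "/ab").
        if path == src or path.startswith(src + "/"):
            moved[dst + path[len(src):]] = node_id
        else:
            moved[path] = node_id
    return moved
```

The cost of the operation grows with the size of the subtree, since every descendant's path has to be rewritten.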

If an operation results in a path conflict, CDrive provides a merge option that lets the user decide whether to overwrite the existing path with the new node information, preserve the existing node, or fail the operation.
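The three outcomes of a path conflict can be sketched as a small policy switch. The enum values and the function `place_node` are hypothetical names chosen for this example, not CDrive's API.

```python
from enum import Enum


class MergePolicy(Enum):
    OVERWRITE = "overwrite"   # replace the existing node at the path
    PRESERVE = "preserve"     # keep the existing node, drop the incoming one
    FAIL = "fail"             # abort the operation


class PathConflictError(Exception):
    """Raised when a path is already taken and the policy is FAIL."""


def place_node(tree, path, node_id, policy):
    """Insert node_id at path, resolving a conflict per the given policy.

    Returns the node ID that ends up at the path.
    """
    if path in tree:
        if policy is MergePolicy.FAIL:
            raise PathConflictError(path)
        if policy is MergePolicy.PRESERVE:
            return tree[path]
    tree[path] = node_id
    return node_id
```

Whichever policy is chosen, the path still maps to exactly one node afterwards, so the unique-path invariant holds.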

Access Control

In CDrive, authorization is driven by the partition or workspace type, as described in the Workspace section below. An application that owns a workspace can control access to its files/folders by integrating with authorization callbacks in CDrive. A user, on the other hand, has complete control over the files/folders in their personal workspace.

CDrive allows users to collaborate by sharing files/folders with any set of permissions or user-based access control. If a folder or top-level CNode is shared with a set of permissions, such as read/download, then this access control applies to all the children in that subtree. CDrive also allows team folder creation for collaboration among artists in different geolocations. Changes made by one artist are visible to another based on the latest state of the folder being shared.

CDrive acts as a proxy layer for other Netflix services in the cloud because it provides user-level authorization on files and folders. For every operation, CDrive gets the initial user or application context from the request and verifies whether that user or application has the required access/permissions on the set of CNodes for that operation.
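The way a share on a folder applies to everything beneath it can be sketched as a check over a node's ancestors. The share table layout, permission names, and function names here are assumptions for illustration only.

```python
def effective_permissions(path, user, shares):
    """Union of permissions granted to user on path or on any ancestor folder.

    shares maps (path, user) to a set of permission names.
    """
    granted = set()
    parts = path.strip("/").split("/")
    for depth in range(1, len(parts) + 1):
        ancestor = "/" + "/".join(parts[:depth])
        granted |= shares.get((ancestor, user), set())
    return granted


def is_allowed(path, user, permission, shares):
    """True if the user holds the permission directly or through an ancestor."""
    return permission in effective_permissions(path, user, shares)
```

For example, a read/download share on a folder makes every file below it readable and downloadable by that user, while uploads remain denied.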

Workspace

All tree structures in CDrive belong to a unique workspace. Workspace in CDrive is an isolated file/folder logical partition. A workspace defines the authorization model, mutability, and data lifecycle for files and folders in that partition. A workspace can be of the following types.

User/Personal Workspace

User workspaces are used to store work-in-progress files per production for a user. Hence, files/folders within user workspaces are considered mutable. Data retention for all files/folders in a personal workspace is temporary, and simple purge data lifecycle policies can be applied once production has launched, as these temporary files won’t be needed anymore. A user workspace uses a simple authorization model in which only that user has access. A user can provide access to these nodes through the shares feature.

Application/Project Workspace

Application or Project workspaces are used to store finalized assets that do not need further mutations. Hence, these are immutable tree structures. It uses a federated authorization model, delegating the auth to an owner application tied to that workspace. Data lifecycle policies are a bit complicated and cannot be applied at the whole workspace level here as these workspaces contain final delivery assets that need to be kept in storage forever. Data lifecycle decisions to archive or purge are taken at the individual file/folder level. We have a blog post covering the intelligent data lifecycle solution in detail here.

Shared/Team Workspace

A shared workspace is similar to a User workspace in terms of mutability. The only difference is that it is owned by CDrive and shared among users for collaboration in a project. Authorization for any files/folders under a shared workspace is based on an access control list associated with nodes. In these workspaces, data lifecycle management follows a similar principle as user workspaces. All files/folders belonging to shared workspaces are considered temporary and only kept while the show is in production.
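The three workspace types differ along the same three axes (mutability, authorization, and data lifecycle), which can be summarized in a small lookup table. The names and string descriptions below are a paraphrase of the text for illustration, not configuration taken from CDrive.

```python
from dataclasses import dataclass
from enum import Enum


class WorkspaceType(Enum):
    USER = "user"
    PROJECT = "project"
    TEAM = "team"


@dataclass(frozen=True)
class WorkspacePolicy:
    mutable: bool
    authorization: str
    lifecycle: str


# Summary of the behavior described for each workspace type.
POLICIES = {
    WorkspaceType.USER: WorkspacePolicy(
        mutable=True,
        authorization="owner only, plus explicit shares",
        lifecycle="temporary; purge after production launch",
    ),
    WorkspaceType.PROJECT: WorkspacePolicy(
        mutable=False,
        authorization="delegated to the owning application",
        lifecycle="decided per file/folder (archive or purge)",
    ),
    WorkspaceType.TEAM: WorkspacePolicy(
        mutable=True,
        authorization="access control list on nodes",
        lifecycle="temporary; kept while the show is in production",
    ),
}


def can_mutate(workspace_type):
    """Return whether nodes in this workspace type may be modified."""
    return POLICIES[workspace_type].mutable
```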

Stats and Numbers

- As of 10/2024, CDrive stores metadata for more than 14.2 billion files/folders

- 848k workspaces: user 70%, project 27%, team 3%

- More than 50 million new CDrive nodes created every week on average

- Total visits to ContentHub UI (built on top of Content Drive) weekly

- UI page visits by various studios and production departments further highlight the importance of Content Drive for business.

- This graph provides a quick summary of server-level Requests per step and overall P90 Latencies for the endpoints taken over a one-day window.

Future posts

We will come back with more posts on the following interesting problems we are working on at present:

- Search: CDrive has APIs to search by file path under a partition, but it does not yet support search on arbitrary node attributes. Search capability for an application or a user in a project is very useful for Machine Learning and user-facing workflows.

- Sharding: With data growing exponentially, CDrive faces a new challenge in serving read queries for a container with millions of files/folders. CDrive plans to address this by adding support for sharding. The idea is to divide the huge container into multiple shards, which can reduce the container retrieval cost.

- CDrive Versioning: Studio applications need the capability to support “artist’s file sessions,” where artists have access rights to view the changes that happened to files/folders in their workspaces, get change notifications, refresh the artist’s workstation, and revert to a point-in-time version. With this new requirement, CDrive needs to provide the versions/change tracking capabilities of a cloud-enabled file system.

Acknowledgments

Special thanks to our stunning colleagues Rajnish Prasad, Jose Thomas, Olof Johansson, Victor Yelevich, Vinay Kawade, and Shengwei Wang.